20-Minute Hands-on ZFS

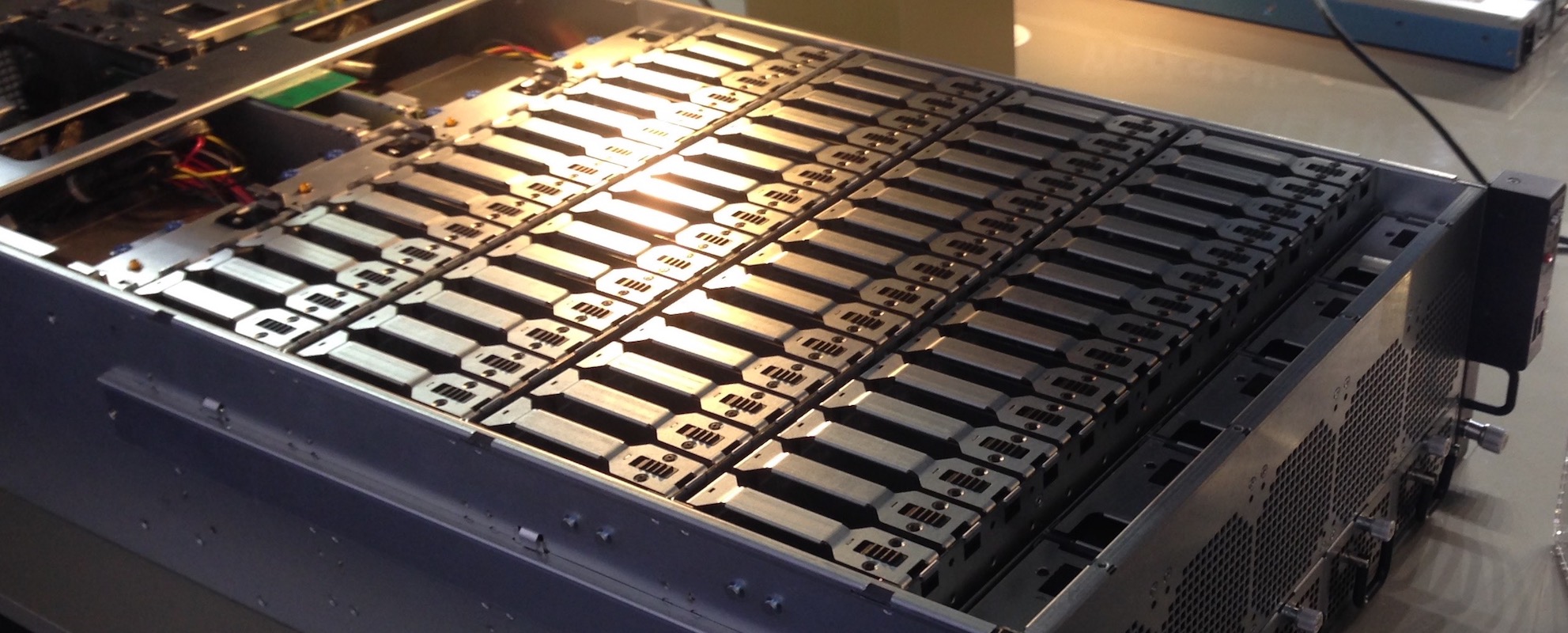

ZFS is often called the last word in file systems.

It is a new approach to dealing with large pools of disks, originally developed by Sun.

It was later ported to FreeBSD, Mac OS X (10.5 only) and Linux.

This text shows some of the basic features of ZFS and demonstrates them hands-on by example.

Prerequisites

- FreeBSD

- Solaris

- Mac OS X (userland only)

In our example we use

SunOS openindiana 5.11 oi_151a5 i86pc i386 i86pc Solaris.

as an environment.

But most commands also work on the other systems.

Since we do all the work within a VM, our commands have the pattern:

Input VM:

command

Output VM:

result

Pool Creation

First we need to know which disks are present in our environment.

There are several ways to get a basic disk listing. Under (Open-)Solaris this can be done with:

Input VM:

format < /dev/null

Output VM:

AVAILABLE DISK SELECTIONS:

0. c4t0d0 /pci@0,0/pci8086,2829@d/disk@0,0

1. c5t0d0 /pci@0,0/pci1000,8000@16/sd@0,0

2. c5t1d0 /pci@0,0/pci1000,8000@16/sd@1,0

3. c5t2d0 /pci@0,0/pci1000,8000@16/sd@2,0

4. c5t3d0 /pci@0,0/pci1000,8000@16/sd@3,0

5. c5t4d0 /pci@0,0/pci1000,8000@16/sd@4,0

6. c5t5d0 /pci@0,0/pci1000,8000@16/sd@5,0

7. c5t6d0 /pci@0,0/pci1000,8000@16/sd@6,0

8. c5t7d0 /pci@0,0/pci1000,8000@16/sd@7,0

About Pools

With ZFS you can create different kinds of pools from a given number of disks.

You can also create several pools within one system.

The following pool types are possible and most commonly used:

| Type | Info | Performance | Capacity | Redundancy | Command |
|---|---|---|---|---|---|
| JBOD | Just a bunch of disks. In theory it is possible to create one pool for each disk in the system, although this is not commonly done. | of each disk | of each disk | – | zpool create pool1 disk1; zpool create pool2 disk2; … |
| Stripe | This is equivalent to RAID0: the data is distributed over all disks in the pool. If one disk fails, all the data is lost. You can also stripe across several redundant groups (e.g. two raidz groups) to get better redundancy. | very high | N Disks | no | zpool create pool1 disk1 disk2 |
| Mirror | This is equivalent to RAID1: the data is written to both disks in the pool. Restoring a pool (resilvering) means copying the data from the remaining disk. | normal | 1 Disk | +1 | zpool create pool1 mirror disk1 disk2 |
| Raidz | This is equivalent to RAID5. One disk's worth of capacity holds parity data, which is distributed across all disks. Restoring a pool (resilvering) is less efficient, since the data has to be reconstructed from the remaining disks. | high | N-1 Disks | +1 | zpool create pool1 raidz disk1 disk2 disk3 |
| Raidz2 | This is equivalent to RAID6. Two disks' worth of capacity hold parity data. Restoring a pool (resilvering) is less efficient, since the data has to be reconstructed from the remaining disks and their parity data. | high | N-2 Disks | +2 | zpool create pool1 raidz2 disk1 disk2 disk3 disk4 |
| Raidz3 | There is no real RAID equivalent for this one. Three disks' worth of capacity hold parity data. | high | N-3 Disks | +3 | zpool create pool1 raidz3 disk1 disk2 disk3 disk4 disk5 |

You can also add hot spares for better fallback behaviour, and SSDs to cache reads (cache devices) and to speed up writes (log devices).

I also created a benchmark with various combinations.
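The capacity column of the table above can be turned into a small helper. This is only a rough sketch: it assumes a single group of equally sized disks, and the function name usable_disks is made up for this example, it is not part of ZFS.

```shell
#!/bin/sh
# usable_disks KIND N
# Prints how many disks' worth of capacity is left for data when N equally
# sized disks are combined as KIND (simplified model, hypothetical helper).
usable_disks() {
    kind=$1
    n=$2
    case "$kind" in
        stripe)       echo "$n" ;;          # no redundancy, all disks hold data
        mirror)       echo 1 ;;             # every disk holds a full copy
        raidz|raidz1) echo $((n - 1)) ;;    # one disk's worth of parity
        raidz2)       echo $((n - 2)) ;;    # two disks' worth of parity
        raidz3)       echo $((n - 3)) ;;    # three disks' worth of parity
        *) echo "unknown pool type: $kind" >&2; return 1 ;;
    esac
}

usable_disks raidz 3     # the three-disk raidz used in this text
usable_disks raidz2 4
```

For the three-disk raidz used throughout this text, two disks' worth of space remain for data.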

Create a basic pool (raidz)

Input VM:

zpool create tank raidz c5t0d0 c5t1d0 c5t2d0

...

zpool status

Output VM:

pool: tank

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c5t0d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

errors: No known data errors

(Raid5)

You can already access the newly created pool:

Input VM:

ls -al /tank

Output VM:

...

total 4

drwxr-xr-x 2 root root 2 2012-10-23 22:02 .

drwxr-xr-x 25 root root 28 2012-10-23 22:02 ..

Create a basic pool (raidz) with one spare drive

Input VM:

zpool create tank raidz1 c5t0d0 c5t1d0 c5t2d0 spare c5t3d0

...

zpool status

Output VM:

pool: tank

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c5t0d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

spares

c5t3d0 AVAIL

errors: No known data errors

List the available layout

Input VM:

zpool list

Output VM:

NAME SIZE ALLOC FREE EXPANDSZ CAP DEDUP HEALTH ALTROOT

tank 1,46G 185K 1,46G - 0% 1.00x ONLINE -

*The 1,46G shown here does not reflect the real available space, because it includes the space used for parity. If you copy a 1G file to the pool, it will use 1,5G (1G data + 512M parity).
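The parity arithmetic in the note above can be checked with a few lines of shell. This is a simplified sketch that ignores metadata, padding and compression; the function name raw_usage_mib is an assumption made for this example.

```shell
#!/bin/sh
# raw_usage_mib DATA_MIB DISKS PARITY
# Estimates the raw pool space (in MiB) consumed by DATA_MIB of file data
# on a raidz group of DISKS disks with PARITY parity disks.
# Simplified model: raw = data * disks / (disks - parity).
raw_usage_mib() {
    data=$1
    disks=$2
    parity=$3
    echo $(( data * disks / (disks - parity) ))
}

# A 1G (1024 MiB) file on the three-disk raidz1 from this text:
raw_usage_mib 1024 3 1    # prints 1536, i.e. 1G data + 512M parity
```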

Create a striped pool

Input VM:

zpool create tank raidz1 c5t0d0 c5t1d0 c5t2d0 raidz1 c5t4d0 c5t5d0 c5t6d0

...

zpool status

Output VM:

pool: tank

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

tank ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

c5t0d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

raidz1-1 ONLINE 0 0 0

c5t4d0 ONLINE 0 0 0

c5t5d0 ONLINE 0 0 0

c5t6d0 ONLINE 0 0 0

errors: No known data errors

(Raid50 = Raid5 + Raid5)

Deal with disk failures

Input VM:

zpool create tank raidz1 c5t0d0 c5t1d0 c5t2d0 spare c5t3d0

Failure Handling

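Input Host (overwrite part of the virtual disk image with random data; the offset and length are only illustrative):

dd if=/dev/urandom of=1.vdi bs=1048576 seek=10 count=10 conv=notrunc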

Wait for it…

or Input VM:

pool: tank

state: DEGRADED

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: http://illumos.org/msg/ZFS-8000-9P

scan: resilvered 66K in 0h0m with 0 errors on Tue Oct 23 22:14:19 2012

config:

NAME STATE READ WRITE CKSUM

tank DEGRADED 0 0 0

raidz1-0 DEGRADED 0 0 0

spare-0 DEGRADED 0 0 0

c5t0d0 DEGRADED 0 0 64 too many errors

c5t3d0 ONLINE 0 0 0

c5t1d0 ONLINE 0 0 0

c5t2d0 ONLINE 0 0 0

spares

c5t3d0 INUSE currently in use

errors: No known data errors

Input VM:

zpool clear tank

...

zpool detach tank c5t0d0

zpool replace tank c5t0d0 c5t7d0
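Before deciding between clearing, detaching or replacing, it helps to see at a glance which devices are unhealthy. The following is a minimal sketch that filters status text like the output shown above; the function name unhealthy_devices is hypothetical, and the parsing assumes the column layout seen in this text.

```shell
#!/bin/sh
# unhealthy_devices
# Reads `zpool status` text on stdin and prints "name state" for every
# entry in the config section whose state is not ONLINE, AVAIL or INUSE.
unhealthy_devices() {
    awk '
        $1 == "NAME" && $2 == "STATE" { in_config = 1; next }
        $1 == "errors:"               { in_config = 0 }
        in_config && NF >= 2 && $2 != "ONLINE" && $2 != "AVAIL" && $2 != "INUSE" {
            print $1, $2
        }
    '
}

# Demo with a shortened copy of the status output from this text, so the
# sketch runs without ZFS. On a real system: zpool status tank | unhealthy_devices
printf '%s\n' \
    'NAME STATE READ WRITE CKSUM' \
    'tank DEGRADED 0 0 0' \
    'c5t0d0 DEGRADED 0 0 64 too many errors' \
    'c5t3d0 ONLINE 0 0 0' \
    'spares' \
    'c5t3d0 INUSE currently in use' \
    'errors: No known data errors' | unhealthy_devices
```

Only the degraded entries are printed; the spare that has taken over is reported as healthy and therefore filtered out.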

Create file systems

Input VM:

zfs create tank/home

zfs create tank/home/user1

...

chown -R user:staff /tank/home/user1

...

zfs get all tank/home/user1

...

zfs set sharesmb=on tank/home/user1

...

zfs set quota=500M tank/home/user1

Copy a file from Mac OS X into the SMB share.

Snapshot

Input VM:

zfs snapshot tank/home/user1@basic

...

zfs list

...

zfs list -t snapshot

Output VM:

NAME USED AVAIL REFER MOUNTPOINT

rpool1/ROOT/openindiana@install 84,0M - 1,55G -

tank/home/user1@basic 0 - 42,6K -

Input VM:

zfs snapshot -r tank/home@backup

...

zfs list -t snapshot

Output VM:

NAME USED AVAIL REFER MOUNTPOINT

rpool1/ROOT/openindiana@install 84,0M - 1,55G -

tank/home@backup 0 - 41,3K -

tank/home/user1@basic 0 - 42,6K -

tank/home/user1@backup 0 - 42,6K -

Input VM:

zfs clone tank/home/user1@basic tank/home/user2

Output VM:

tank/home/user2 1,33K 894M 70,3M /tank/home/user2

Restoring Snapshots

Delete the ZIP file in the SMB share.

Input VM:

ls -al /tank/home/user1

...

zfs rollback tank/home/user1@backup

Output VM:

ls -al /tank/home/user1

Resizing a Pool

Input VM:

zpool list

...

zpool replace tank c5t0d0 c5t4d0

zpool replace tank c5t1d0 c5t5d0

zpool replace tank c5t2d0 c5t6d0

...

zpool scrub tank

...

zpool list

Output VM:

NAME SIZE ALLOC FREE EXPANDSZ CAP DEDUP HEALTH ALTROOT

tank 1,46G 381K 1,46G 1,50G 0% 1.00x ONLINE -

Input VM:

zpool set autoexpand=on tank
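The reason all three disks are replaced before the pool can grow is that a raidz group uses each member only up to the size of its smallest disk. A rough sketch of that rule follows; the function name vdev_raw_size_mib and the disk sizes are assumptions for illustration, and label and metadata overhead are ignored.

```shell
#!/bin/sh
# vdev_raw_size_mib SIZE_MIB...
# Raw size of a raidz group (including parity): the smallest disk size
# multiplied by the number of disks. Simplified, hypothetical helper.
vdev_raw_size_mib() {
    smallest=$1
    for size in "$@"; do
        if [ "$size" -lt "$smallest" ]; then
            smallest=$size
        fi
    done
    echo $(( smallest * $# ))
}

vdev_raw_size_mib 500 500 500       # prints 1500: three small disks
vdev_raw_size_mib 1024 1024 500     # prints 1500: one small disk holds the group back
vdev_raw_size_mib 1024 1024 1024    # prints 3072: all disks replaced, pool can expand
```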

Using ZFS for Backups

Bash-Script

rsync -avrz --progress --delete /Users/user root@nas.local::user-backup/

backupdate=$(date "+%Y-%m-%d")

ssh root@nas.local zfs snapshot tank/backup@$backupdate
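The date-stamped snapshot name used by the script can be factored into a small function, which makes it easy to test without touching the NAS. This is only a sketch; snapshot_name is a hypothetical helper, and the optional second argument exists purely so the result is reproducible.

```shell
#!/bin/sh
# snapshot_name DATASET [DATE]
# Prints DATASET@DATE; DATE defaults to today in YYYY-MM-DD format,
# the same format the backup script uses.
snapshot_name() {
    dataset=$1
    day=${2:-$(date "+%Y-%m-%d")}
    echo "${dataset}@${day}"
}

snapshot_name tank/backup 2012-10-23    # prints tank/backup@2012-10-23
snapshot_name tank/backup               # uses today's date
```

In the backup script the result could then be passed to the remote command, for example ssh root@nas.local zfs snapshot "$(snapshot_name tank/backup)".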
